When you buy rechargeable batteries on Amazon, it is unlikely to recommend a battery charger that is out of stock or can’t be delivered in a reasonable time. That’s because, even as Amazon’s AI teams build great recommender systems, its engineers, designers, and product managers figure out how the AI should work with the rest of their systems so that customer satisfaction (and, in the long term, revenue) is maximized.

Building AI for social good needs the same mindset.

(See the first post in this series – Lesson 1: User-centered? What about the beneficiaries, the choosers, and the payers?)

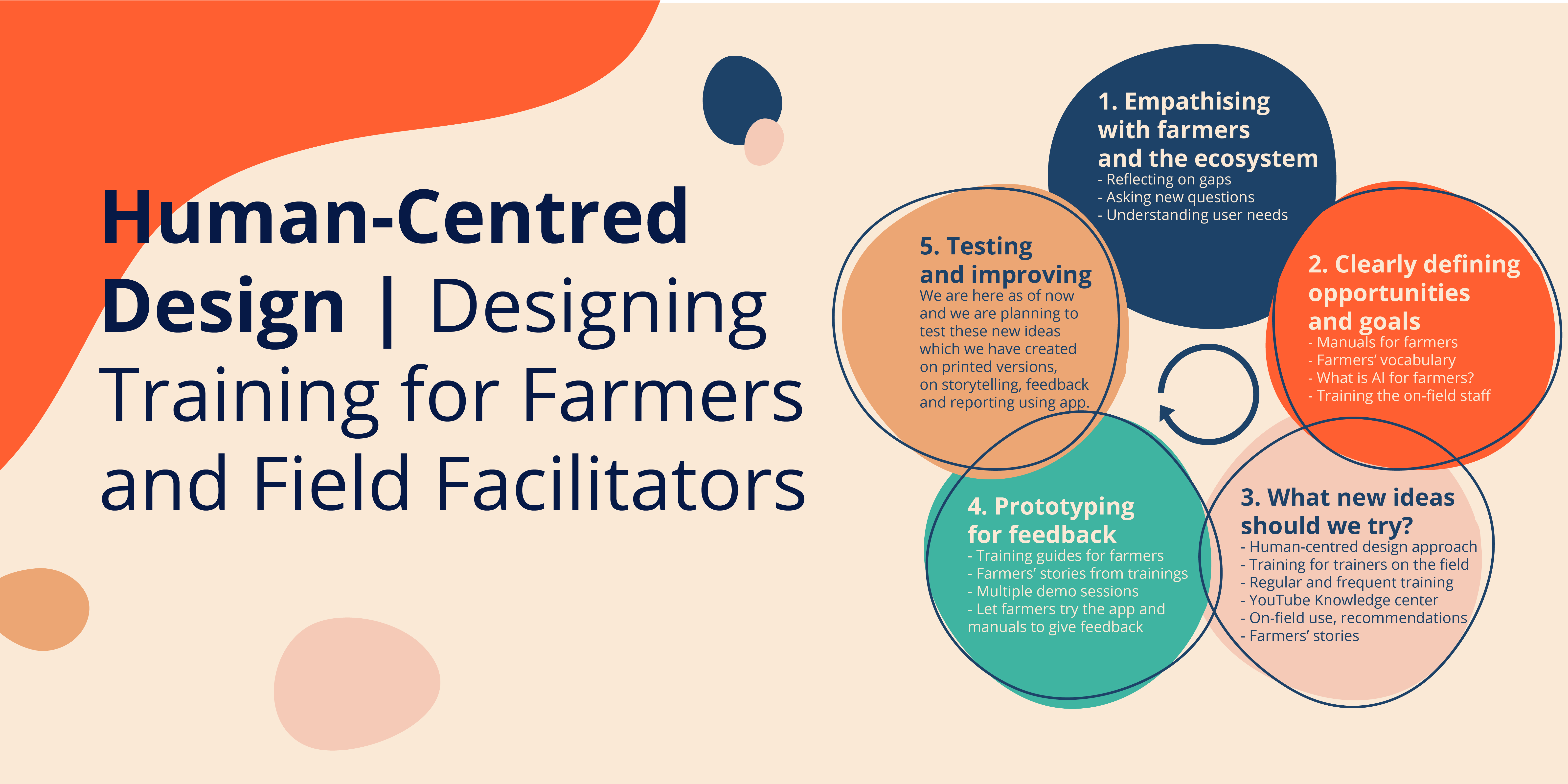

Let’s take the example of Wadhwani AI’s work on pest management in cotton.* The AI we are building identifies and counts pests from photos of pest traps taken by lead farmers. The AI’s output is used to provide timely advice on which pesticides to use, how much, and when. Problem solved? No!

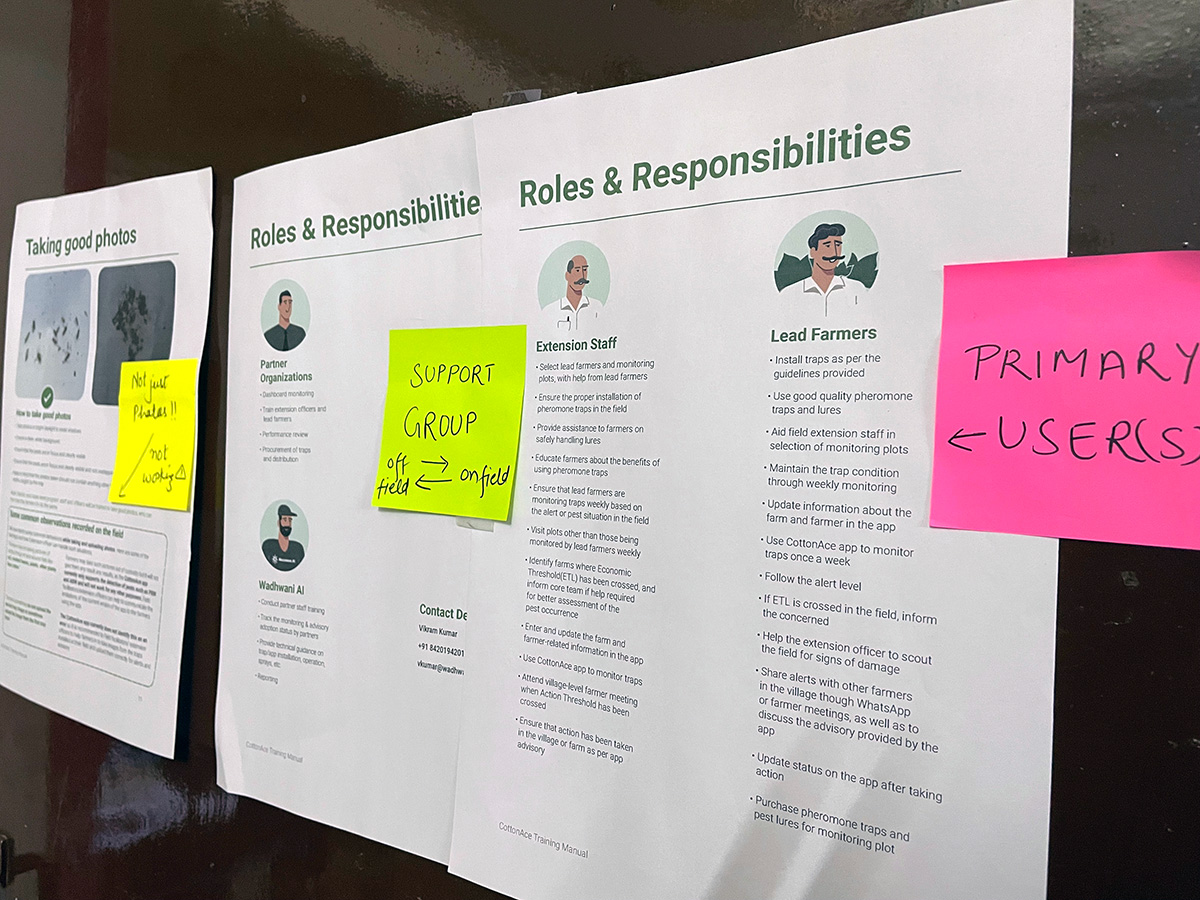

For this AI to make a difference, a few things have to happen both upstream and downstream of the AI-powered app. For example, upstream, the pest traps must be set up and monitored; downstream, the advice generated (say, to spray or not to spray pesticides) must be acted upon. Easier said than done.

In the case of Amazon, economic alignment of all stakeholders ensures a certain level of coordination and drives adoption of smoothly functioning digital infrastructure (hardware, software, connectivity). It’s a virtuous cycle that, over time, makes it easy for product managers and designers to focus on smaller pieces of the large puzzle, confident that they can tackle or influence other pieces if the big picture requires it.

In contrast, stakeholders’ interests in large-scale programs (say, government health or agriculture programs) do not pull in the same direction. The pesticide supplier, a local monopoly of sorts, may encourage more pesticide usage, not less. The apps deployed for use by field staff are generally designed for ‘reporting’ purposes, not for making data useful for farmers. And, generally speaking, prescribed protocols and actual practice are rarely the same.

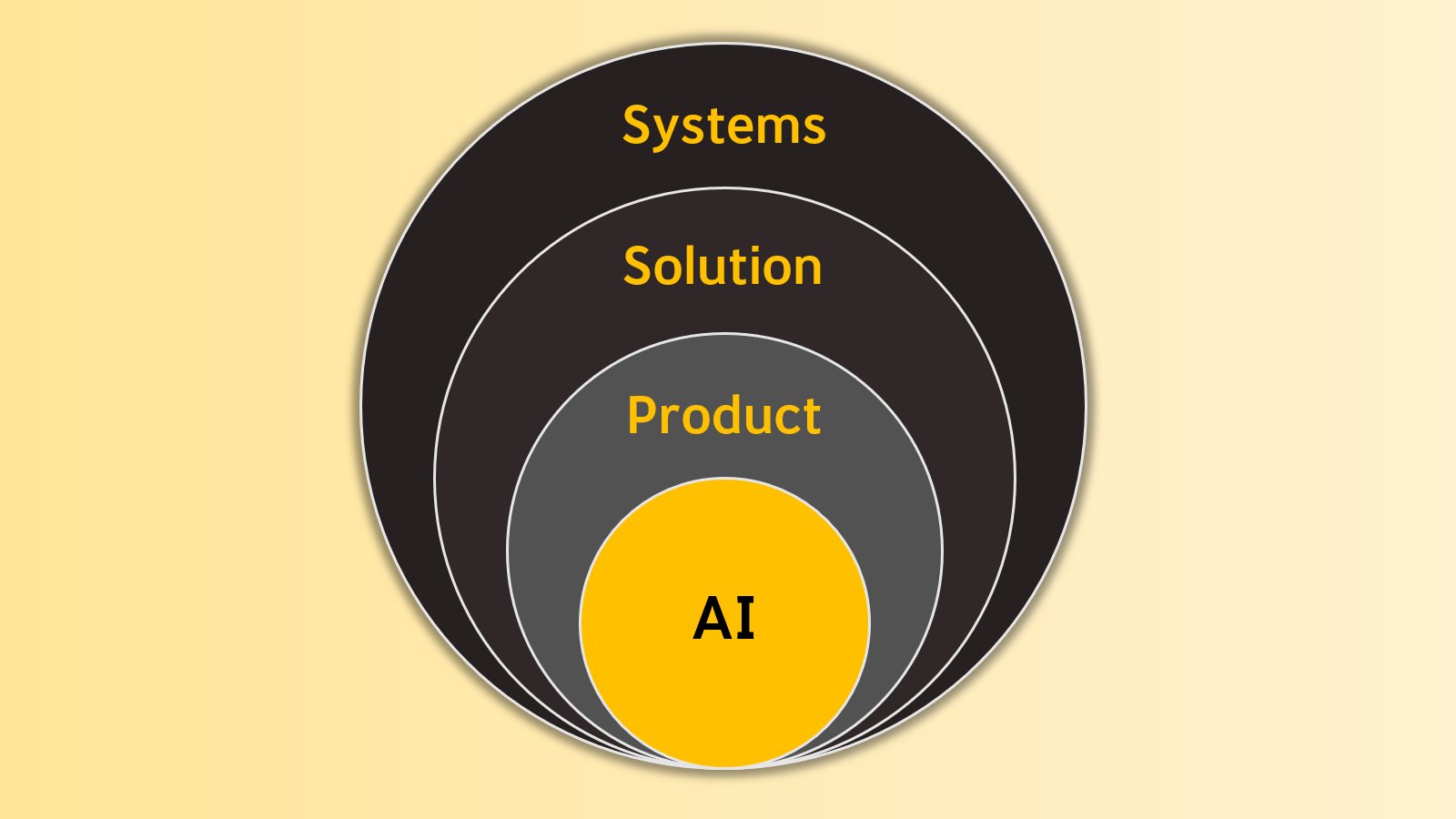

So, in social development settings, taking a solution perspective can seem daunting. It is tempting for technology innovators to focus on great AI or product, and hope that other systemic issues will get resolved in due course. But the ‘technology-for-good’ experience of the past decade serves as a warning. Government and NGO programs are groaning under the weight of additional work created by software in silos and semi-digitized workflows. Med-tech and ag-tech innovators bemoan programs’ inability to adopt their products for impact. Administrators lament innovators’ inability to see the big picture.

That is why, at Wadhwani AI, we don’t stop at asking whether an AI-based solution is possible and whether it could make a big difference. We also look for partners, both NGOs and governments, to co-create, iterate, pilot, and scale that solution.

This focus on the solution, as opposed to the AI or the product, is reflected in our innovation process. Our product managers write a ‘Solution Requirements Document’,** not your typical PRD (product requirement document)!

* Wadhwani AI was one of the winners of the global Google AI Impact Challenge for this work, but it’s still early days and we expect plenty of changes in the coming months as we learn from our field experiments.

** The Solution Requirements Document (SRD) is a living document that evolves as we learn. Product managers write it in collaboration with programs, design, engineering, and AI research, with inputs from key external partners.

P.S.: Scroll down for a side note on AI Research and Programs — two functions most product managers, designers, and engineers would not have worked closely with.

PSST! We are hiring. Write to us if you are a world-beating product manager or design researcher: careers@wadhwaniai.org. Don’t forget to mention this blog!

Side note on Programs and AI Research functions

It may be worth explaining the roles of Programs and AI Research in our innovation process, because these two functions are not typically seen in product teams.

Programs is like the external operations and business development functions rolled into one. The Programs team is closest to customers and users in the social development world. They take the lead in engaging governments and NGO programs, accessing and collecting data, finding relevant domain expertise (say, entomologists or epidemiologists), running field experiments, and so on. They help answer questions like: What are the key systemic constraints to keep in mind? Are workflow modifications possible? Can we collect this data? Who should we partner with? What is the path to scale?

The AI Research team, besides building the AI at the core of a solution, helps the rest of the team understand what’s possible (or not) and what new research directions are worth pursuing. They keep abreast of the latest research, which is usually ahead of industry practice. They help the rest of the team answer questions like: What data are required? How might current workflows need to change? How should we evaluate new models before scaling?

Coming Up:

We will talk about internal stakeholders (AI researchers, engineers, program managers, and leadership) in subsequent posts.

Lesson 3: Data, data everywhere. Not a drop to drink.

Lesson 4: AI research is iterative. Very iterative.

Lesson 5: UI for AI. AI for UI.

Lesson 6: Agile with clay feet